Google's White Paper

In September last year, Julia Wiesinger, Patrick Marlow and Vladimir Vuskovic released a groundbreaking white paper called “Agents”. The white paper surfaced on X.com in early January 2025 and has been trending since. It describes in depth what an AI agent is, how it differs from an AI model, and how you can get started with coding your own agent.

In this post, I will provide a concise analysis and summary of the paper, aiming to extract its key insights.

Key differences between AI Models and AI Agents

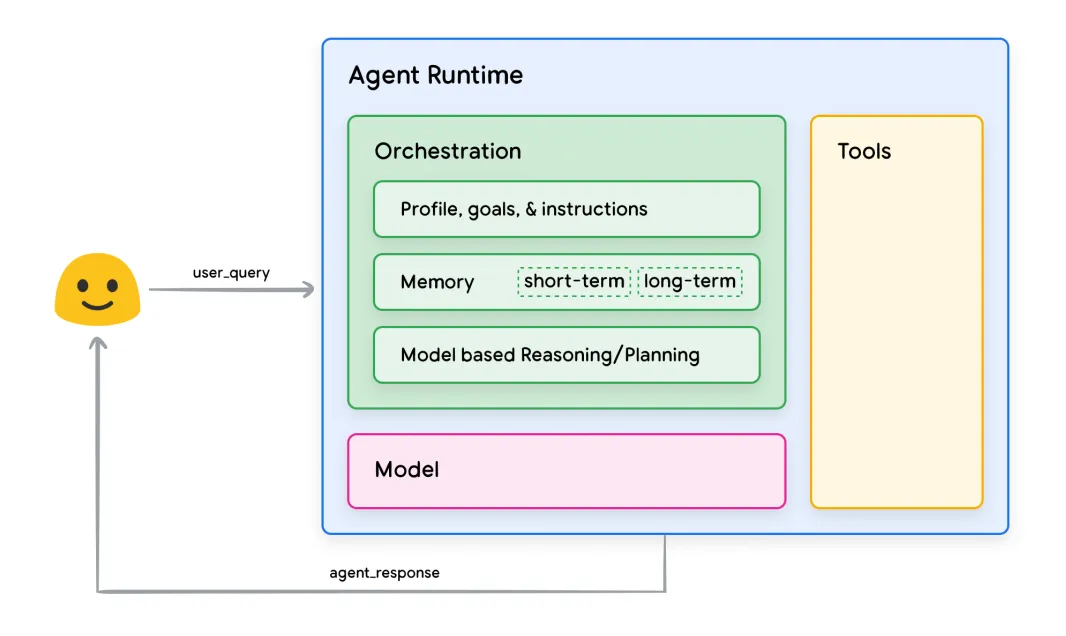

AI models and AI agents are distinct yet interconnected components in the AI landscape. Models, such as large language models (LLMs), are designed for generating responses or predictions based on their training data, while agents extend this functionality by acting autonomously to achieve specific goals. Agents integrate models with tools that enable interaction with external systems and environments, bridging the gap between static knowledge and dynamic real-world applications. They also feature an orchestration layer, allowing for iterative reasoning and multi-step problem-solving, unlike models that operate on single-turn predictions. These capabilities make agents adaptable, proactive, and effective in complex tasks.

Source: “Agents” by Julia Wiesinger, Patrick Marlow and Vladimir Vuskovic

Knowledge Scope

AI Model: Limited to training data.

AI Agent: Extends knowledge through external systems using tools.

Context Handling

AI Model: Single inference, lacks session history management.

AI Agent: Maintains session history for multi-turn interactions.

Tool Integration

AI Model: No native tool implementation.

AI Agent: Natively integrated tools for real-world interactions.

Reasoning Capability

AI Model: No native logic layer, relies on user-defined prompts.

AI Agent: Built-in cognitive architecture with reasoning frameworks.

How agents operate

Unlike standalone AI models, agents are capable of long-term reasoning. They create a plan of action, start executing it, and refine the remaining steps in a feedback loop after each one. This approach leads to better end results and mimics the way a human works through a given task.

The white paper presents three reasoning frameworks for this kind of cognitive architecture, all of which have become established in the prompt engineering space:

- ReAct: Combines reasoning and acting in an iterative process, enabling agents to use external tools or data sources dynamically while reasoning through tasks.

- Chain of Thought (CoT): Facilitates step-by-step reasoning, allowing the agent to break down complex problems into sequential, logical steps to arrive at accurate conclusions.

- Tree of Thought (ToT): Builds on CoT by exploring multiple reasoning pathways simultaneously, akin to decision trees, enabling the agent to evaluate and choose optimal solutions.

Overall, research shows that the quality of AI model responses depends heavily on the model's ability to reason, act, select appropriate tools, and use factual data effectively. Agents are built around exactly these capabilities, which makes them crucial to improving AI systems.
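To make the reason-act-observe cycle more concrete, here is a minimal sketch of a ReAct-style loop. It is not code from the white paper: the model is replaced by a scripted stand-in (`scripted_model`), and the tool `lookup_population` with its data is hypothetical. A real agent would call an LLM at the point where the stand-in is used.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tool: a plain function standing in for a real external API.
def lookup_population(city: str) -> str:
    data = {"paris": "2.1 million", "berlin": "3.7 million"}
    return data.get(city.lower(), "unknown")

TOOLS: Dict[str, Callable[[str], str]] = {"lookup_population": lookup_population}

def scripted_model(question: str, observations: List[str]) -> Tuple[str, str, str]:
    """Stand-in for an LLM call (assumption, not a real model).

    Returns (thought, action, action_input). With no observations yet it
    asks for a tool call; once it has one, it finishes with an answer.
    """
    if not observations:
        return ("I need the population figure.", "lookup_population", "Paris")
    return ("I have what I need.", "finish",
            f"Paris has about {observations[-1]} inhabitants.")

def react_loop(question: str,
               model: Callable[[str, List[str]], Tuple[str, str, str]],
               tools: Dict[str, Callable[[str], str]],
               max_steps: int = 5) -> str:
    observations: List[str] = []
    for _ in range(max_steps):
        # Reason: the model decides what to do next given what it has seen.
        thought, action, action_input = model(question, observations)
        if action == "finish":
            return action_input
        # Act: run the chosen tool, then feed the observation back in.
        tool = tools.get(action)
        observation = tool(action_input) if tool else f"unknown tool: {action}"
        observations.append(observation)
    return "stopped: step limit reached"

answer = react_loop("What is the population of Paris?", scripted_model, TOOLS)
print(answer)
```

The step limit is a design choice of this sketch: it keeps the loop from running forever if the model never decides to finish.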

Connecting AI agents to the outside world via tools

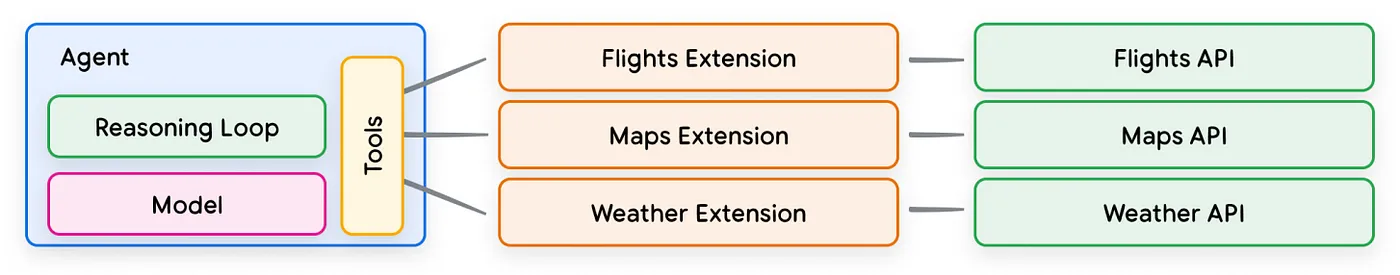

Language models are great at processing information but can’t directly interact with the real world, limiting their ability to handle tasks requiring real-time action or access to external systems. To address this, Google models currently use three main types of tools — extensions, functions, and data stores — extending their capabilities to both understand and act in the real world, unlocking new possibilities.

Extensions

Extensions act as a standardized bridge between agents and APIs, enabling agents to execute API calls seamlessly without relying on custom code. For example, in a flight booking scenario, instead of manually coding logic to parse user queries and handle errors, the agent dynamically selects the right Extension, like the Google Flights API, to retrieve relevant data. This flexibility allows agents to handle diverse tasks, such as booking flights or finding nearby coffee shops. Extensions are configured as part of the agent and dynamically applied during runtime, making them efficient and adaptable.
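The idea of picking an Extension at runtime can be sketched roughly as follows. Everything here is an assumption made for illustration: the `Extension` class, the two fake API functions, and the word-overlap scoring are hypothetical and only stand in for the model's own choice. This is not the actual Extensions interface.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Extension:
    name: str
    description: str
    examples: List[str]          # example queries that describe when to use it
    call: Callable[[str], str]   # wrapper around the underlying API

# Hypothetical API wrappers; a real extension would make an HTTP request.
def fake_flights_api(query: str) -> str:
    return f"[flight results for: {query}]"

def fake_places_api(query: str) -> str:
    return f"[place results for: {query}]"

EXTENSIONS = [
    Extension("flights", "Search flights between cities",
              ["book a flight from Austin to Zurich"], fake_flights_api),
    Extension("places", "Find nearby places",
              ["find a coffee shop near me"], fake_places_api),
]

def select_extension(query: str, extensions: List[Extension]) -> Extension:
    """Pick the extension whose description and examples share the most
    words with the query. A real agent would let the model decide; simple
    word overlap is used here only as a placeholder."""
    query_words = set(query.lower().split())

    def overlap(ext: Extension) -> int:
        text = " ".join(ext.examples + [ext.description]).lower()
        return len(query_words & set(text.split()))

    return max(extensions, key=overlap)

chosen = select_extension("I want to book a flight to Zurich", EXTENSIONS)
print(chosen.name, chosen.call("Austin to Zurich"))
```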

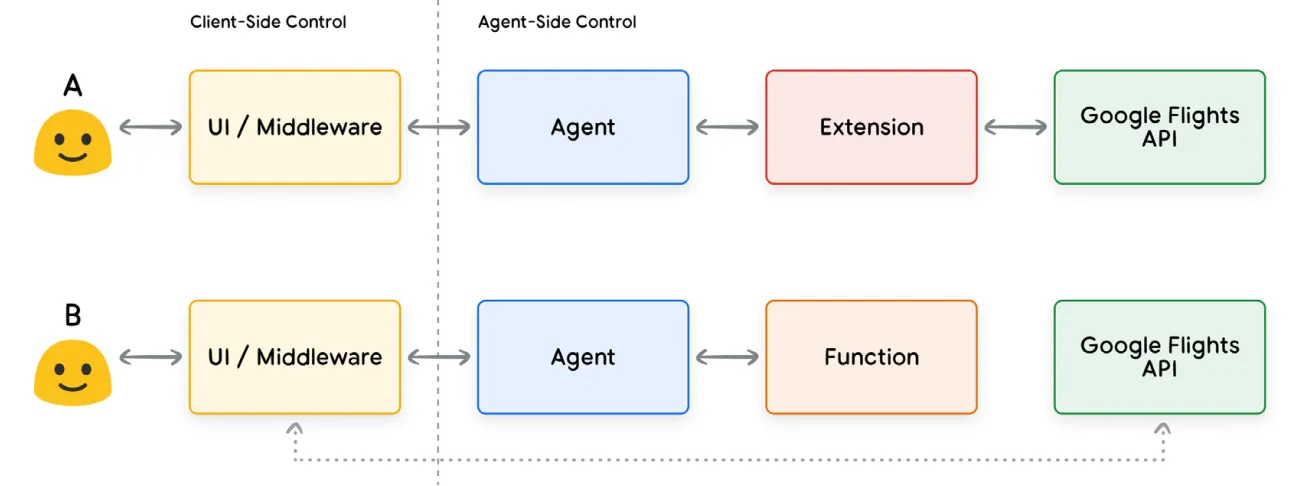

Functions

Functions in agents are reusable modules of code that perform specific tasks based on programming logic. Unlike Extensions, however, Functions are executed on the client side rather than within the agent. They are useful when API calls need to occur outside the agent architecture (e.g. in frontend systems) or when security constraints prevent the agent from accessing an API directly.

The difference between Extensions and Functions is subtle, but decoupling the execution of the logic from the agent gives developers additional room for experimentation.
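A rough sketch of this split: the model only proposes a function name and arguments, and the client application decides whether and how to run it. The JSON shape, `fake_model_output`, and `display_cities` are hypothetical and chosen for illustration; real function-calling APIs define their own formats.

```python
import json
from typing import Callable, Dict, List

def fake_model_output(user_prompt: str) -> str:
    """Stand-in for a model response (assumption). The model does not call
    any API itself; it only returns a structured suggestion."""
    return json.dumps({
        "name": "display_cities",
        "args": {"cities": ["Aspen", "Vail"], "preferences": "skiing"},
    })

# Client-side function: this runs in the application, not in the agent.
def display_cities(cities: List[str], preferences: str) -> str:
    return f"Suggested for {preferences}: {', '.join(cities)}"

# Only functions registered here can be executed, which keeps control
# (and any credentials) on the client side.
CLIENT_FUNCTIONS: Dict[str, Callable[..., str]] = {
    "display_cities": display_cities,
}

def handle_function_call(raw: str) -> str:
    call = json.loads(raw)
    func = CLIENT_FUNCTIONS[call["name"]]  # KeyError if the name is not allowed
    return func(**call["args"])

result = handle_function_call(fake_model_output("Where should I go skiing?"))
print(result)
```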

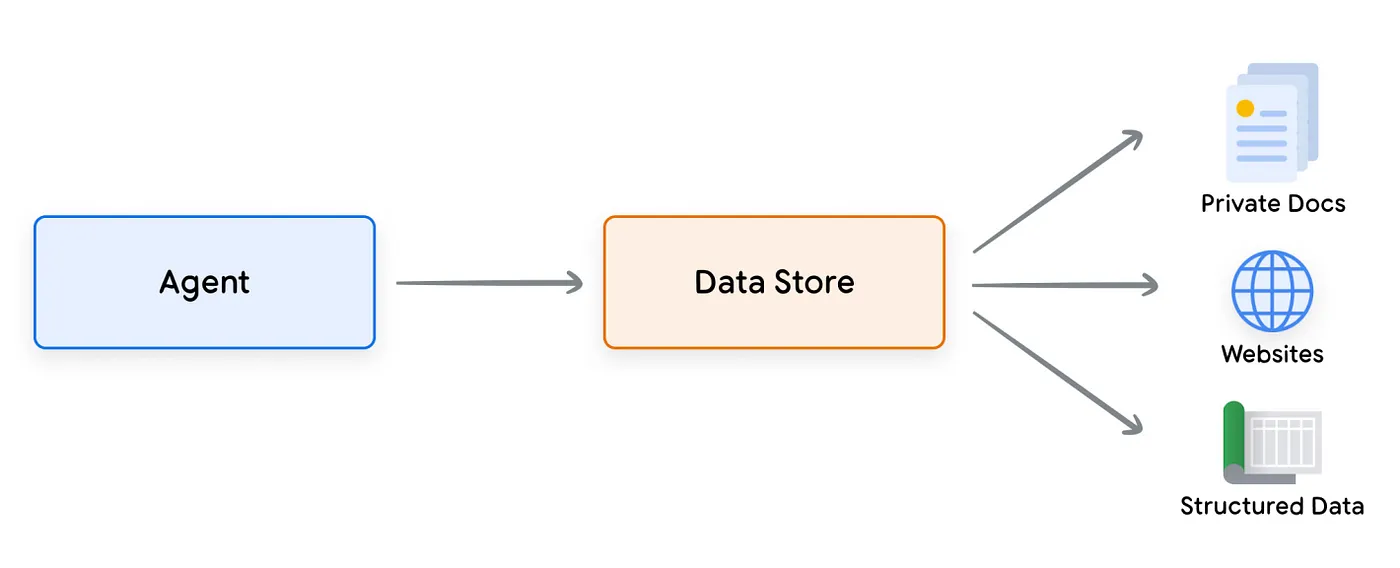

Data stores

Data Stores enable language models to access up-to-date, dynamic information, addressing the challenge of static training data. They allow developers to add data, such as PDFs or spreadsheets, directly into the agent’s workflow without needing retraining. The data is converted into vector embeddings, which the agent can use to enhance responses or actions. This is particularly useful in Retrieval Augmented Generation (RAG) applications, where agents can search and retrieve real-time content from sources like websites or structured documents. The process involves generating query embeddings, matching them against the data store, and using the retrieved content to inform the agent’s actions.
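The retrieval steps described above (embed the query, match it against the store, use the result as context) can be sketched with a toy example. The `DataStore` class and the sample documents are hypothetical, and word counts are used as a crude substitute for real vector embeddings, which would come from an embedding model.

```python
import math
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    # Toy "embedding": a bag of lowercase words. An assumption for this
    # sketch; production systems use learned dense vectors.
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

class DataStore:
    def __init__(self, documents: List[str]) -> None:
        # Documents are embedded once, when they are added to the store.
        self.entries = [(doc, embed(doc)) for doc in documents]

    def retrieve(self, query: str, top_k: int = 1) -> List[str]:
        # Embed the query and rank stored documents by similarity.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [doc for doc, _ in ranked[:top_k]]

store = DataStore([
    "refund policy: refunds are issued within 14 days",
    "shipping policy: orders ship within 2 business days",
])

query = "how long do refunds take"
context = store.retrieve(query)
# The retrieved text would be placed in the prompt that goes to the model.
prompt = f"Context: {context[0]}\nQuestion: {query}"
print(prompt)
```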

Summary

The “Agents” white paper explores the core components of Generative AI agents, focusing on how they extend language models by using tools to interact with real-time data, suggest actions, and complete complex tasks autonomously. Central to agent operations is the orchestration layer, which guides decision-making and action through reasoning techniques like ReAct, Chain-of-Thought, and Tree-of-Thought. Tools such as Extensions, Functions, and Data Stores allow agents to access external systems and knowledge, enhancing their capabilities. Looking ahead, agents will become increasingly sophisticated, solving more complex problems through agent chaining — combining specialized agents to tackle diverse tasks. Building effective agents requires an iterative approach, as each agent is unique, but leveraging these foundational components offers immense potential for impactful, real-world applications.